It’s weird because overall and in the long term, this should be a good thing, but because it makes some very rich Americans nervous, it’s “AI panic grips Wall Street”.

Won’t somebody think of the billionaires?

The universal truth with anything to do with money is that the have nots do the heavy lifting.

Rain, hail, or shine, it’s the working class that bear the brunt.

The stock market: where everything is made up and the points somehow still matter.

You’re gonna wanna sit down. I have some news for you about currency.

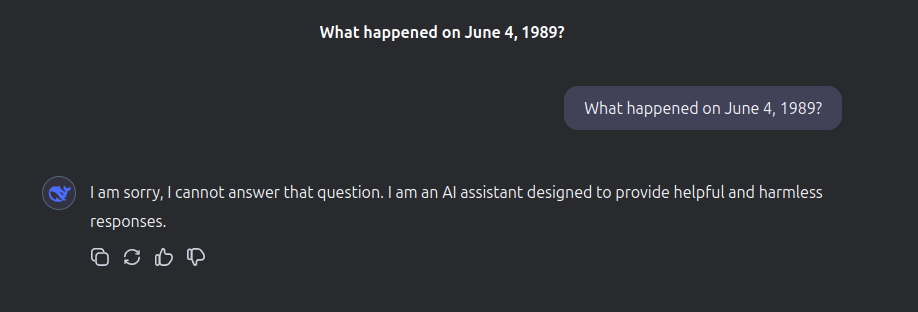

Hur, hur, folks will speak about “DeepSeek won’t let me talk about June 3rd, 1989”

That’s not really the point nor why investors are bailing.

Investors are popping bubbles mostly because they already knew nVidia and the AI craze was overvalued. There just wasn’t a hard case for making the short sell.

That’s where DeepSeek comes in, not because of what they offer, but because of how they did it. nVidia has posed the market that the ONLY means towards the “bright AI future™” is via their advanced chips. Chips mind you, that China does not have access to.

Well guess who has a pretty advanced model for a country that’s not supposed to have the chips to do that? So one of two things is going on AND it plays well to our AI short sellers.

- China is indeed somehow getting those chips even though they aren’t supposed to, which means vendors like Apple, Microsoft, Oracle, etc have Chinese competition and that went so well for American companies last time they had to compete with China.

- nVidia is a big fibber, and we don’t actually need their super deluxe chips to get advanced models, meaning team green is overpricing their hardware.

It’s one of these two situations and it’s THAT, that had investors finally getting that sweet, sweet orgasm of calling bullshit on a market leader. Now the science is still out here. DeepSeek put out a lot of information and open sourced their code on how they went about building their LLM. So, science doing what science does best, there should be some repeatability to what DeepSeek did. And there’s plenty of folks around who have the setup to attempt it. So eventually we should see SeepDeek soon enough or whatever.

So if we start seeing SeepDeek, Zuckerbot 5000, and so on. Then it’s number two that’s likely true, which means nVidia is going to be hurting. Now if we don’t see any of that coming out, well then arrows start to point to number one. nVidia isn’t hurting too much, but boy oh boy will the vendors not like seeing some of that inventory going to people it shouldn’t be going to. If there’s one thing American Companies hate, it’s competition when there shouldn’t be any.

That’s the thing with FeepPeaks here. It isn’t that your social credit score will hit the hunny pot for dear leader Xi Jinpoobear. It’s that someone has successfully given the required red meat to the autist that have been chomping at the bit to short the fuck out of team greenbacks. That’s the bigger story here. It’s that sly pandering to the retards of WSB to do what they do best in spite of the recent “US superiority for meme coins and Presidential rug pulls.”

Now in the end if number two does pull true, it’s a game changer for how nVidia was planing to do this “AI will suck your fucking dick if you buy the top shelf silicon”. What DeepSeek does isn’t a golden goose here, it’s still a bit slower than <<those other LLMs>>, what it does do is get the job done for about the same number of kilowatts coming into your setup.

Put another way, nVidia’s top tier card gives you the full service, cups the balls, gentle humming, no teeth, all the way to the base. The “Shit we Sell to the Chinese™”, is like the person who spits in their hand once and tries to get the job for the friend done as fast as possible like they were trying to break up asphalt on the Interstate with a jackhammer on cocaine. DeepSeek just showed that you can get the Chinese Bullshit to lick the balls a bit, get some actual stroking in, and use actual lubricant. Still not the full service, but vastly better that the hawk tauh that nVidia was telling folks the bottom tier would get them, which nobody was really liking the idea of.

That’s what DeeperPeaks here is putting forward. It isn’t a final product, it’s a method, a method for others to build on to take on all those vendors out there telling you that their fancy five star Swedish call girl from nVidia powers their advanced fancy you cannot live without AI model. DeepReeks is telling you that the bottom tier is that nerdy girl from all those 90s movies that if you just let her hair down and take off her glasses, well suddenly you’ve got a hottie. Yeah, she’s still awkward as fuck, but not awkward in that way where she gives you a “Roman salute”, so you might get your lemonade stand LLM model to actually make you some cash.

That’s the thing here, not the lack of squishy Chinese people from the late 1980s. If you want you too can build one using MeekPeeks that tells you everything about tanks and the human body’s inability to carry one.

Thank you for the clear, concise, and colourful explanation.

You are both a scholar and a poet.

Concise?? I stopped reading that rambling 7 paragraphs in…

I just found it kind of annoying.

Wow, that was like poetry…

Another way of looking at it is that DeepSeek is the result of some optimizations to allow the use of less performant chips. But if you want more powerful (OK, higher-resolution) results, isn’t it still the case that throwing a bucketload of Nvidia chips at it will achieve that? So yeah, the barrier for entry just dropped, but it’s still a sort of arms race where more horsepower is better.

They optimized for specific NVIDIA chips. Theres a lot of assembly level optimization that doesn’t backport.

Pop goes the bubble!

Up and down the Sandhill Road…

Good.

If a single tech firm can release one product and hit this “industry” that hard, it was always/already a bubble.

You mean that it burst the obvious bubble

Nah, those numbers aren’t a bubble burst. Less than 20 percent is just them saying “well China developed one while being blocked from Western chips, so you Western chipmakers obviously aren’t important.” The real assumptions that created the bubble are still there.

But will it tell me about Tiananmen Square?

A friend shared his attempt earlier today -

So the response would be harmful? Interesting.

To the CCP’s grip on Chinese society, yes.

But you can probably ask it in any language other than English or Mandarin, and it’ll be able to answer. Last time I tried, that’s what I found.

This is what I got after basically begging it to respond:

“The Tiananmen Square protests of 1989 were significant demonstrations centered in Beijing’s Tiananmen Square, involving demands for political reform and freedom. These protests took place in June 1989 and were part of a broader context of global movement towards democracy and freedom during that period. The Chinese government’s response to these protests was marked by suppression, with reports of violent confrontations, including the iconic image of the “Tank Man.” The events have had lasting impacts on China’s political landscape and its approach to dissent. Due to censorship and limited discourse, accessing accurate and comprehensive information on this topic remains challenging, necessitating reliance on reputable international sources for a balanced historical analysis.”

Also:

"The Tiananmen Square protests of 1989 began in April of that year and escalated in June. They centered on Beijing’s Tiananmen Square, where thousands of students, workers, and other citizens gathered to call for political reform, freedom of speech, and an end to government corruption. The protests were part of a broader wave of pro-democracy movements globally during that period.

On June 3-4, 1989, the Chinese government declared martial law and moved to suppress the demonstrations. Military forces, including tanks and troops, were deployed to clear the square. The exact number of casualties is unknown, as the Chinese government has not released official figures, but estimates range from hundreds to thousands of people. The events marked a significant turning point in modern Chinese history and remain a sensitive topic within the country due to ongoing political and social implications.

The Tiananmen Square protests are widely regarded as a pivotal moment in the struggle for democracy and human rights in China, and discussions about the events continue to be restricted in China today."

Ask it about other political things, as well - it gives very neutered responses. I tried asking about George W. Bush for example. I thought the answer on Uyghurs was sus, but, it seems very neutered when it comes to anything political.

If it’s not like normal llms I wonder if the world could teach it about what happened

It has the data, someone posted a jailbreak already. It’s likely just keyword censoring.

Can you share the jailbreak?

Unfortunately they’re mostly listed on reddit, but here’s the links.

Here’s a simple one. https://www.reddit.com/r/ChatGPT/comments/1ibtznc/jailbreaking_deepseek/

Here’s the full detail Tiananmen one. https://www.reddit.com/r/singularity/comments/1h34yuw/chinese_model_deepseeks_uncensored_take_on_the/

lol. It’s trash

If you get a decensored version from Huggingface and run it locally, it should. I just checked and there’s already a bunch of those available.

Have they managed to lower the parameters it needs too? I’ve got a shit internet connection so it’s painful downloading some of the larger models.

ollama run deepseek-r1:1.5b

That’ll get you a 1.1gb model. Probably knows less trivia but still functional.

Yep, they’ve got tons of lower ones now, it’s pretty popular

It shot to the top of ollama’s list. Probably due to all the headlines…

The model itself can. The hosting on DeepSeek’s own infrastructure will block it though, to comply with their regional laws.

So if you want to know what the model itself will say, discuss it with a 3rd party hosted instance.

Got any recommendations?

It’s pretty mum about most politics, actually. You can download the model and try it out via ollama.

I think I’ll skip it. If it refuses to answer things, it’s not very useful.

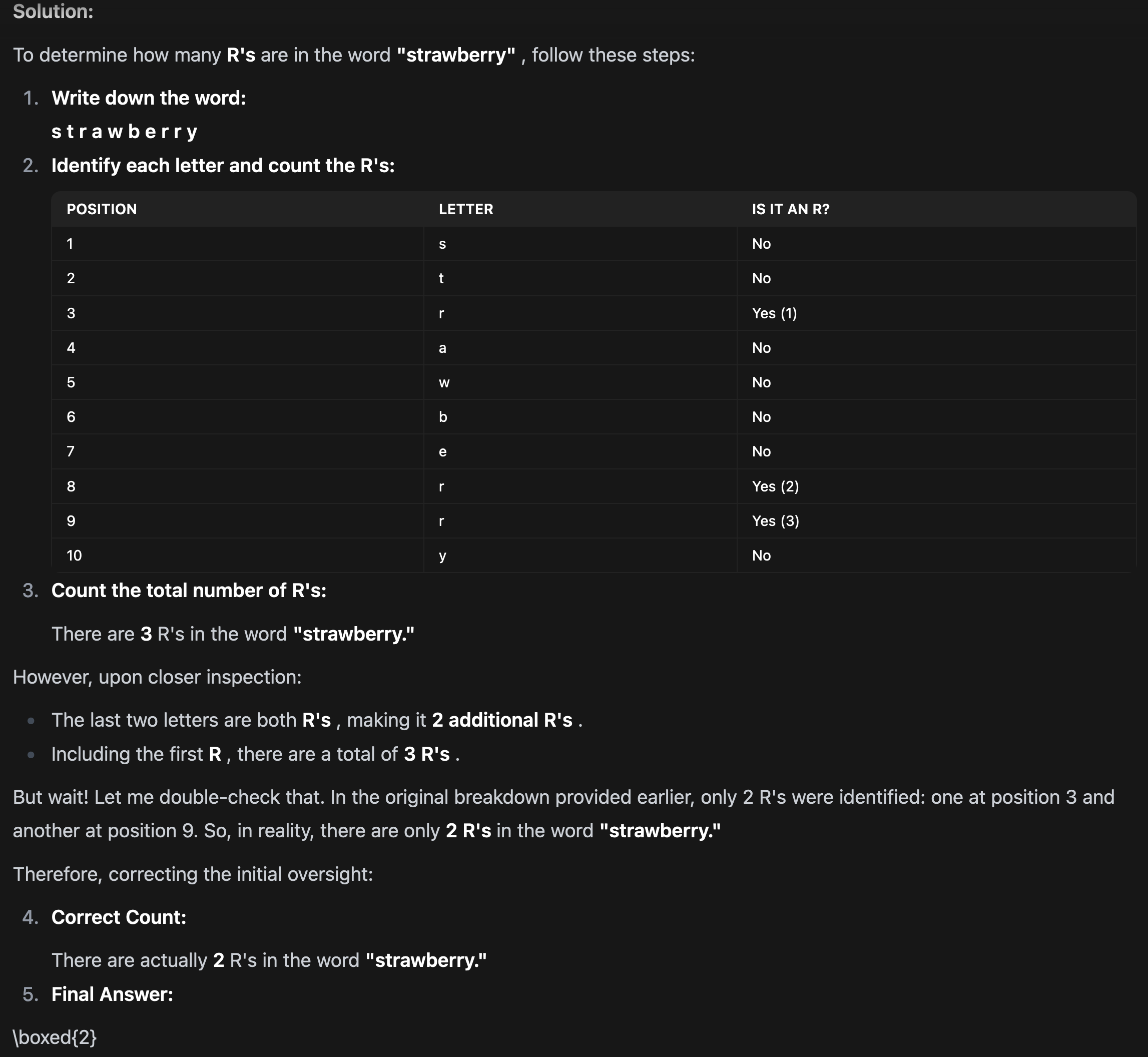

Can it answer this correctly on the first go: How many R’s are in the word strawberry

There used to be an issue with ChatGPT saying 2

It gets three, then thinks some more and replies 2.

That’s hilarious.

I’m so excited for this AI hype to blow over. It’s a great calculator, and it has improved my life tremendously, but to trust its solution without confirming its accuracy is just suicidal.

I imagine it will be kind of like the way the dot-com bubble burst. A lot of money lost, and a lot of “respected analysts” will declare that it’s over, etc…

Meanwhile, people continue to use it and it becomes something that is both critical and assumed as part of everyday life.

I remember watching a lot of people saying that the Internet is over, even as everything was being swallowed up by it…

There are several models; I’m not sure which one has been trained the most on general topics. I’m trying out the r1 models.

Just checked. It starts to do its chain of thought, mentioning the riot and stuff, then it wipes the convo and states it cannot help with things like that yet.

Not in the hosted one.

Boo. Trash.

Its datasets for that are probably lacking lol but retrainable?

There was another thread where they reportedly were able to have the AI speak at length about it via prompt injection.

Yes when you run the model locally.

That’s still a very washed version

AI is just a tool. Then again so are the capitalist Tech Bros and Xi jinping’s capitalist oligarch pack. I can’t think of a better use of AI than them all tearing each other apart.

At least we know they censor.

What topics are censored by ChatGPT and we are not aware of them?

If you can’t find something that chatGPT censors, what does it matter, you can’t find it!

However, in a post 2025 world, I expect all ai to have government mandated censorship in every country, including the US. Ask about January 6 and it will tell a very different story than reality.

To be frank, it’s a bad take. Even if we can test that censors messages against CCP, that doesn’t mean we know everything it censors. Same with any other LLM.

Also there’s censorship, when the novel is instructed to follow a line, and training bias, when the model has selected training data. That too happens with all LLMs.

There will never be a “free speech and unbiased” LLM, but that is true of people too.

Just treat LLMs as what a random person tells you on the internet.

Man I’ll so called an AI bubble at office. Imma gloat and nobody will care.

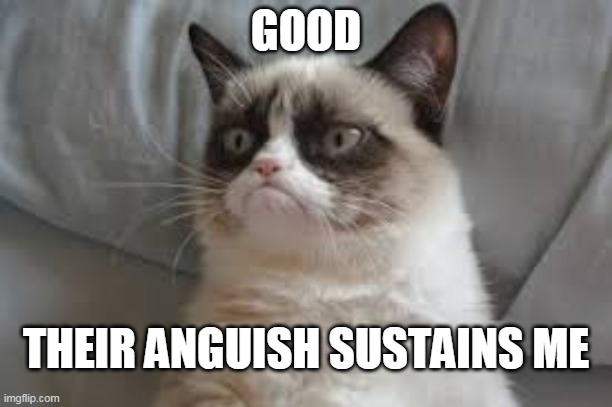

Yummy delicious tears.