Reject proprietary LLMs, tell people to “just llama it”

Ugh. Don’t get me started.

Most people don’t understand that the only thing it does is ‘put words together that usually go together’. It doesn’t know if something is right or wrong, just if it ‘sounds right’.
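To make the “words that usually go together” idea concrete, here is a toy sketch. It is an assumption-heavy simplification: real LLMs are neural networks working on tokens with far more context, not a lookup table of word pairs. The function names (`train_bigrams`, `generate`) and the tiny corpus are made up for illustration. What it shows is that chaining “what usually comes next” can produce fluent-sounding text with no notion of whether the result is true.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, seed=0):
    """Chain words by repeatedly sampling a word that has followed the last one."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = followers.get(out[-1])
        if not options:
            break  # the last word never had a follower in the training text
        out.append(rng.choice(options))
    return " ".join(out)

# Hypothetical three-sentence corpus; every sentence in it is "true".
corpus = (
    "the stadium hosted the games . "
    "the city hosted the olympics . "
    "the stadium is in the city ."
)
model = train_bigrams(corpus)
print(generate(model, "the", 8))
```

Every adjacent word pair in the output appeared somewhere in the corpus, but nothing checks the sentence as a whole, so a chain like “the stadium hosted the olympics” can come out even though no source sentence says that.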

Now, if you throw in enough data, it’ll kinda sorta make sense with what it writes. But as soon as you try to verify the things it writes, it falls apart.

I once asked it to write a small article with a bit of history about my city and five interesting things to visit. In the history bit, it confused two people with similar names who lived 200 years apart. In the ‘things to visit’, it listed two museums by name that are hundreds of miles away. It invented another museum that does not exist. It also happily told me to visit our Olympic stadium. While we do have a stadium, I can assure you we never hosted the Olympics. I’d remember that, as I’m older than said stadium.

The scary bit is: what it wrote was lovely. If you read it, you’d want to visit for sure. You’d have no clue that it was wholly wrong, because it sounds so confident.

AI has its uses. I’ve used it to rewrite a text that I already had and it does fine with tasks like that. Because you give it the correct info to work with.

Use the tool appropriately and it’s handy. Use it inappropriately and it’s a fucking menace to society.

I gave it a math problem to illustrate this and it got it wrong.

If it can’t do that, imagine adding nuance.

Well, math is not really a language problem, so it’s understandable LLMs struggle with it more.

But it means it’s not “thinking” as the public perceives AI.

Hmm, yeah, AI never really did think. I can’t argue with that.

It’s really strange, if I mentally zoom out a bit, that we now have machines that are better at language-based reasoning than logic-based reasoning (like math or coding).

And then Google to confirm the GPT answer isn’t total nonsense.

I’ve had people tell me “Of course, I’ll verify the info if it’s important”, which implies that if the question isn’t important, they’ll just accept whatever ChatGPT gives them. They don’t care whether the answer is correct or not; they just want an answer.

That is a valid tactic for programming or how-to questions, provided you know not to unthinkingly drink bleach if it says to.

Have they? Don’t think I’ve heard that once, and I work with people who use ChatGPT themselves.

I’m with you. Never heard that. Never.

Meanwhile Google search results:

- AI summary

- 2x “sponsored” result

- AI copy of Stackoverflow

- AI copy of Geeks4Geeks

- Geeks4Geeks (with AI article)

- the thing you actually searched for

- AI copy of AI copy of stackoverflow

Should we place bets on how long until ChatGPT responds to anything with:

Great question! Before I give you a response, let me show you this great video for a new product you’ll definitely want to check out!

Nah, it’ll be more subtle than that. Just like Brawndo is full of the electrolytes plants crave, responses will be full of subtle product and brand references marketers crave. And A/B studies performed at massive scale in real time on unwitting users, and evaluated with other AIs, will help them zero in on the most effective way to pepper those in for each personality type it can differentiate.

“Great question! Before I give you a response, let me introduce you to Raid: Shadow Legends!”

Less than a year.

I say 6 months.

I give him 11 minutes.

And my axe!

Google search is literally fucking dogshit and the worst it has EVER been. I’m starting to think fucking DuckDuckGo (which relies on Bing) gives better results at this point.

I have been using Duck for a few years now and I honestly prefer it to Google at this point. I’ll sometimes switch to Google if I don’t find anything on Duck, but that happens once every three or four months, if that.

I only go to the googs for maps.

I go to Apple Maps.

To this day, any time I navigate somewhere with Google Maps while someone else separately navigates there with Apple Maps, we end up at different places. More often than not, I’m where we both should’ve ended up.

Interesting. In my experience, Google Maps is “too creative” with its routes. It usually sends me down back roads that only make my drive much longer.

I suspect that Google Maps preemptively routes some percentage of drivers through alternate directions in order to ease congestion. (Because if Maps tells you that the obvious route will get you there in thirty minutes and it takes an hour then you’re going to be mad at Maps)

Regardless of their route choices, Maps is always solid with ETA for me and it has access to a ton of traffic data.

I can’t afford the water damage to the car.

Same.

The one thing Google still has over Duck for me at this point is Reddit results. So much niche information is stored on that site, but they’ve blocked anyone other than Google from crawling it, so other engines can’t index anything posted after that policy change.

Same here. I only switch to Google to search for images for memes. For some reason Bing has a harder time finding random Star Trek scenes.

I use DDG but find Google gives better results, and Google’s snippet feature still rocks.

Careful. People here get mad about that for some reason. Like you can’t think Google sucks but that their search engine is still better than others. And people will argue with you that Google is way worse than anything else. I don’t know what planet they are from.

I don’t like AI in search engines, but even DuckDuckGo’s AI is better lol.

And they let you turn it off

I’m in the sciences and the AI overview gives wrong answers ALL THE TIME. If students or, god forbid, professionals rely on it, that’s bad news.

Isn’t it funny that a lot of people were worried that Wikipedia would be unreliable because anyone could edit it, and it turned out to be pretty reliable, yet AI is being pushed hard despite being even more unreliable than the worst speculation about Wikipedia?

Being for profit excuses being shitty I guess.

AI is so fucking cap. There is no way to know if the information is accurate. It’s completely unreliable.

We have new feature, use it!

No, it’s broken and stupid, I prefer old feature.

… Fine!

breaks old feature even harder

I’ve used Google since 2004. I stopped using it this year because, as the parent comment points out, it’s all marketing and AI. I like Qwant. It’s not perfect, but it functions like a previous version of Google.

I have tried a few replacements for Google but I’ve yet to find anything remotely as effective for searches about things close to me. Like if I’m looking for a restaurant near me, Kagi, Startpage, and DDG are not good. Is Qwant good for a use case like that? Haven’t heard about it before.

I’ve had some success, but it goes off of your ISP’s server location, so for me it’s not very useful.

I have not enjoyed Qwant - tried it as my default but I’m back to DDG. I just want a functional Google again (boolean operators please…)

Yeah, but at least we can vet that shit better than the unsourced and hallucinated drivel provided by ChatGPT.

Even adding, “Reddit” after a search only brings up posts from 7 years ago.

The irony is that Gemini Pro is actually better than ChatGPT (which is not saying a ton, as OpenAI have completely stagnated and even some small open models are better now), but whatever they use for search is beyond horrible.

How long until ChatGPT starts responding “It’s been generally agreed that the answer to your question is to just ask ChatGPT”?

I’m somewhat surprised that ChatGPT has never replied with “just Google it, bruh!” considering how often that answer appears in its data set.

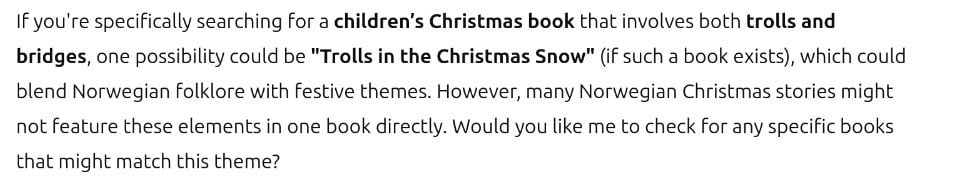

Last night, we tried to use chatGPT to identify a book that my wife remembers from her childhood.

It didn’t find the book, but instead gave us a title for a theoretical book that could be written that would match her description.

At least it said if it exists, instead of telling you when it was written (hallucinating)

Maybe it’s trying to motivate me to become a writer.

Same happens every time I’ve tried to use it for search. It will be radioactive for this type of thing until someone figures that out. Quite frustrating: if they spent as much time on determining when a user wants objective information with citations as they do on determining whether the response breaks content guidelines, we might actually have something useful. Instead, we get AI slop.

Did you ChatGPT this title?

“Infinitively” sounds like it could be a music album for a techno band.

The infinitive is the form of a verb that in English is said “to [x]”

For example, “to run” is the infinitive form of “run.”

OP probably meant “infinitely” worse.

OP should edit the post; or kill it if it can’t be edited

We’ll stand by to upvote the fix.

“Did you ChatGPT it?”

I wondered what language this would be an unintended insult in.

Then I chuckled when I ironically realized, it’s offensive in English, lmao.

Did you cat I farted it?

just call it cgpt for short

Computer Generated Partial Truths

Sadly, partial truths are an improvement over some sources these days.

Which is still better than “elementary truths that will quickly turn into shit I make up without warning”, which is where ChatGPT is and will forever be stuck.

Both suck now.

I have to say, look it up online and verify your sources.

I say, “Just search it.” Not interested in being free advertising for Google.

“Let’s ask MULTIVAC!”

GPT’s natural language processing is extremely helpful for simple questions that have historically been difficult to Google because they aren’t a concise concept.

The type of thing that is easy to ask but hard to create a search query for, like tip-of-my-tongue questions.

Google used to be amazing at this. You could literally search “who dat guy dat paint dem melty clocks” and get the right answer immediately.

I mean tbf you can still search “who DAT guy” and it will give you Salvador Dali in one of those boxes that show up before the search results.

The type of question where you don’t even know what you don’t know.

This is why so much research has been going into AI lately. The trend is already to not read articles or source material and to base opinions off clickbait headlines, so relying on AI summaries and search results naturally comes next. People will start to assume any generated response from a ‘trusted search AI’ is true, so there is a ton of value in getting an AI to give truthful and correct responses all of the time, and then being able to edit certain responses to inject whatever truth you want. Then you effectively control what truth is and can selectively edit public opinion by manipulating what people are told is true. Right now we’re also being trained that AI may make things up and not be totally accurate, which gives those running the services a plausible excuse if caught manipulating responses.

I am not looking forward to arguing facts with people citing AI responses as their source for truth. I already know if I present source material contradicting them, they lack the ability to actually read and absorb the material.

This is entirely Google’s fault.

Google intentionally made search worse, but even if they wanted to make it better again, there’s very little they could do. The web itself has an extremely low signal-to-noise ratio, and it’s almost impossible to write an algorithm that lets the signal shine through (while also giving any search results back).

It would still be better if quality search (not extracting more and more money in the short term) was their goal.