It’s impressive how many times Valve goes “fine, I’ll do it myself”:

- This

- FPS becoming stale and called murder hallways

- Video games having piss poor support in Linux

to list but a few

- A controller designed for PCs

- Handheld PC-based portable console.


I think they’re referring to the touch pads and how well they work as a replacement for a mouse. Way better than any other controller.

A controller designed for PCs

You mean the mouse and keyboard?

Pretty sure they meant a controller designed for use while not hunching over a desk

Keyboard and mouse are not responsible for your bad posture; you can hunch over a joypad too.

I admit my response wasn’t particularly serious. I just meant to point out to that commenter that it’s not difficult to understand what the previous commenter meant.

Dual shock?

Others: Valve is pretty cool for making a dedicated PC controller that is a joy to use

You: BuT tHeY hAvE a DiFfReNt CoNtRoLeR dEdIcAtEd FoR sOmEtHiNg ElSe?!?1?

The PS5 controller feels right at home connected to my Steam Deck

I forgot Valve have Stockholmed a generation of G*mers.

And Sony hasn’t?

Come to think of it, why did Sony even bother designing a new controller for the playstation? Atari had that handled back in the 80’s so what good did they think they were doing.

EDIT: Holy hell I just realized how insane it was for Sholes, Soule, and Glidden to develop the QWERTY typewriter. We already had pens! What a waste of time!

🤓 The development of the Atari joystick to the NES / SNES controllers to the Sony Playstation controller was about being able to enable more easy-access control options. Flight sims on the Atari had to be very simple.

Curiously, mouse and keyboard (or for me trackball and keyboard) are so intuitive for looking around a 3D environment that I prefer it to the Sony config, but then it took years and years before a working config (involving easy selection, locking and auto-aim) could be made for FPSes.

Joysticks are also difficult to replace, and plenty of flight sims / space sims still allow for joystick controls when they are available. The problem there is that potentiometers which allow for gradient maneuvering tend to deteriorate quickly, so we’ve veered away from joysticks to laser-tracked mice (which don’t depend on moving parts). /🤓

They made their own controller if that’s what you’re asking.

No, they mean this thing

It’s dead though isn’t it?

I believe there was a leak about a steam controller 2

Please let it be true, I have been praying that they would for so long. I have 2 of the original and love them.

god please let it be true.

Only because SCUF (and by extension their owners Corsair) are patent trolls and forced Valve to discontinue the Steam Controller due to the existence of back buttons. Valve eventually won the appeals but by then the SC was already out of production

It lives on in spirit in the Steamdeck and the HTC Vive wands.

Steamdeck actually has a second analogue stick and a D-pad though.

The lack of them on the old controller was painful and just made me swap back to a wired Xbox 360 pad. I can see the idea behind it, it’s just that games weren’t made for it.

Yeah, it’s a great controller overall but the lack of the second analog stick breaks it for a lot of games. It probably is the best one to use for games that don’t really support controllers in the first place.

Yeah, Steam Deck (with the back buttons, and the insane customization you can do with Steam Input) probably has the best controller.

But the haptics and adaptive triggers of the DualSense give it a slight edge imo. Unless your game uses a mouse, then it’s SD all the way.

Steam Controller 2 is coming

I have it.

A madkatz Xbox controller

The Y button sticks as is tradition

It looks like a Madkatz controller, you’re right, but it has some really cool features. The D-Pad and the right stick are replaced by two clickable touch surfaces, to allow you a different type of control than just using two sticks. It also has an accelerometer and gyroscope, which is also a nice thing to have when you want to aim in games almost as precisely as with a mouse. On top of that, there are also two extra buttons on the rear of the controller.

Also Wayland in Linux wasn’t improving as fast as they’d like so they’re creating their own development protocol for that too lol

The whole “don’t reinvent the wheel” mantra in software development is strong, and for very good reason. When a company basically does so, repeatedly, and is repeatedly successful at it, that truly is notable.

I guess that’s just your comment though, with more words and no examples lol

- steam input

Now, can we fix flashlights in games such that we don’t get a well defined circle of lit area surrounded by a completely black environment? Light doesn’t work that way, it bounces and scatters, meaning that a room with a light in it should almost never be completely dark. I always end up ignoring the “adjust the gamma until some wiggle is just visible” setup pages and just blow the gamma out until I can actually see a reasonable amount in the dark areas.

Yes, really dark places should be really dark. But, once you add a light to the situation, they should be a lot less dark.

Now, can we fix flashlights in games such that we don’t get a well defined circle of lit area surrounded by a completely black environment?

Sure, we can do that, but we won’t because of the narrative and functional in-game purpose of the flashlight. It’s not meant to be realistic, it’s meant to make the game feel a specific way.

It’s also why a flashlight may be able to run for dozens of hours straight on a single battery but in video games it’ll die in minutes if not seconds. Realism is unfair. Also the same reason why nuclear reactors in video games are always dangerous. Because representing them realistically would be boring to the average adrenaline junkie “gamer”.

We are now in the age of the continuous flashlight (though Subnautica has operational long-running, replaceable batteries). L4D in 2007 had permanent flashlights that worked pretty well and felt right.

Feels unnecessarily hyperbolic to call the average gamer an “adrenaline junkie”. Games need gameplay and fixing things that aren’t working, be it a dying flashlight or an erupting reactor, is easy and extensible gameplay.

I think you read that wrong. It is not that the average gamer is an adrenaline junkie. The person takes a subgroup of gamers, the “adrenaline junkies”, and from that subgroup the average is meant.

The discussion was of a common trope in video games, the person I replied to referenced an unspecific element in video game storytelling, and you expect the primary understanding of the subsequent label to be talking to a sub-section of a sub-section of all gamers?

Either you are reading a far too charitable (and unrealistic) interpretation of the previous comment, or the original comment needs significant revision.

Even if we take your reading as valid, how would the attention span of a minor fraction of all gamers move the needle, in terms of game design, enough to bring about the tropes previously discussed?

You just described ray tracing. The problem is, it’s incredibly computationally expensive. That’s why DLSS and FSR were created to try and make up for the slow framerates.

there’s more to dynamic global illumination than just ray tracing

Not in an ideal world. Ray tracing is how light actually works in real life. Everything we do with global illumination right now is a compromised workaround, since doing a lifelike amount of ray tracing in real time, at reasonable framerates, is still too much for our hardware.

Nothing about 3D animation is ideal. It’s all about reasonable approximations. Needing to build better GPUs to support tracing individual photons is insane when you could just slightly increase ambient lighting in the area of a light source.

The current flashlight implementation is a reasonable approximation, when the rest of the map is properly lit with very dim light.

I’ve seen an awesome “kludge” method where, instead of simulating billions of photons bouncing in billions of directions off every surface in the entire world, they are taking extremely low resolution cube map snapshots from the perspective of surfaces on a “one per square(area)” basis once every couple frames and blending between them over distance to inform the diffuse lighting of a scene as if it were ambient light mapping rather than direct light. Which is cool because not only can it represent the brightness of emissive textures, but it also makes it less necessary to manually fill scenes with manually placed key lights, fill lights, and backlights.

Light probes, but they don’t update well, because you have to render the world from their point of view frequently, so they’re not suited for dynamic environments

They don’t need to update well; they’re a compromise to achieve slightly more reactive lighting than ‘baked’ ambient lights. Perhaps one could describe it as ‘parbaked’. Only the ones directly affected by changes of scene conditions need to be updated, and some tentative precalculations for “likely” changes can be tackled in advance while pre-established probes contribute no additional process load because they aren’t being updated unless, as previously stated, something acts on them. IF direct light changes and “sticks” long enough to affect the probes, any perceived ‘lag’ in the light changes will be glossed over by the player’s brain as “oh, my characters’ eyes are adjusting, neat how they accommodated for that.”–even though it’s not actually intentional but rather a drawback of the technology’s limitations.
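For anyone who wants the probe idea in concrete terms, here is a rough Python sketch. It is not how any particular engine does it: the `Probe` class, the inverse-distance blend, and the per-frame update budget are all assumptions made up for illustration, and a real probe would store a low-resolution cube map rather than a single colour.

```python
from dataclasses import dataclass


@dataclass
class Probe:
    position: tuple          # (x, y, z) location of the probe in the scene
    ambient: tuple           # stand-in for a low-res cube-map capture: one RGB colour
    dirty: bool = False      # set when something nearby changed the lighting


def sample_ambient(probes, point, eps=1e-6):
    """Blend the ambient colour of all probes, weighted by inverse squared distance."""
    total_weight = 0.0
    blended = [0.0, 0.0, 0.0]
    for probe in probes:
        dist_sq = sum((a - b) ** 2 for a, b in zip(probe.position, point))
        weight = 1.0 / (dist_sq + eps)
        total_weight += weight
        for i in range(3):
            blended[i] += weight * probe.ambient[i]
    return tuple(channel / total_weight for channel in blended)


def refresh_dirty(probes, capture, budget=1):
    """Re-capture at most `budget` dirty probes per frame; clean probes cost nothing."""
    updated = 0
    for probe in probes:
        if probe.dirty and updated < budget:
            probe.ambient = capture(probe.position)  # hypothetical re-render callback
            probe.dirty = False
            updated += 1
    return updated


probes = [
    Probe((0.0, 0.0, 0.0), (0.0, 0.0, 0.0)),
    Probe((2.0, 0.0, 0.0), (1.0, 1.0, 1.0)),
]
print(sample_ambient(probes, (1.0, 0.0, 0.0)))  # halfway between a dark and a bright probe
```

The budget is what produces the “eyes adjusting” lag described above: dirty probes wait their turn, so the ambient light catches up over several frames instead of all at once.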

I am not educated enough to understand this comment

Shouldnt ray tracing fix that?

Yes, but it’s exponentially expensive compared to cheating.

Well they built entire video cards series to address that, I hope they were worth it.

We haven’t had decent GPU pricing since we started ramming that onto cards, although I will openly admit we’ve also had a lifetime of wacky fucking shit go on since then.

and it’s still incredibly computationally expensive

yes and no, ray tracing still requires decisions about fidelity, the same poor decisions can be made in either rendering system

That’s exactly the sort of thing his work improved. He figured out that graphics hardware assumed all lighting intensities were linear when in fact it scaled dramatically as the RGB value increased.

Example: a red value of 128 out of 255 should be 50% of the maximum brightness. That’s what the graphics cards and likely the programmers assumed, but the actual output was 22% brightness.

So you would have areas that were extremely bright immediately cut off into areas that were extremely dark.

Unreal Engine 5.5 Lumen supposedly does it well with the light scattering.


“It’s a bit technical,” begins Birdwell, "but the simple version is that graphics cards at the time always stored RGB textures and even displayed everything as non linear intensities, meaning that an 8 bit RGB value of 128 encodes a pixel that’s about 22% as bright as a value of 255, but the graphics hardware was doing lighting calculations as though everything was linear.

“The net result was that lighting always looked off. If you were trying to shade something that was curved, the dimming due to the surface angle aiming away from the light source would get darker way too quickly. Just like the example above, something that was supposed to end up looking 50% as bright as full intensity ended up looking only 22% as bright on the display. It looked very unnatural, instead of a nice curve everything was shaded way too extreme, rounded shapes looked oddly exaggerated and there wasn’t any way to get things to work in the general case.”
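To put numbers on the 128-versus-22% point, here is a minimal Python sketch. It assumes the standard sRGB transfer curve as a stand-in for whatever response the displays of that era actually had (a plain 2.2 power curve gives nearly the same answer), and the function names are invented for illustration.

```python
def srgb_to_linear(value_8bit):
    """Decode an 8-bit sRGB channel value to linear light intensity in [0, 1]."""
    c = value_8bit / 255.0
    # Piecewise sRGB transfer function: linear toe, then a 2.4 power segment.
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(intensity):
    """Encode a linear light intensity in [0, 1] as an 8-bit sRGB channel value."""
    c = max(0.0, min(1.0, intensity))
    if c <= 0.0031308:
        encoded = c * 12.92
    else:
        encoded = 1.055 * c ** (1 / 2.4) - 0.055
    return round(encoded * 255)


# A surface lit at half intensity should emit 50% of the light.
wanted = 0.5

# Treating the stored value as linear: write 128 and hope for the best.
naive_value = round(wanted * 255)
print(naive_value, round(srgb_to_linear(naive_value), 3))  # 128 actually displays as ~0.216

# Doing the lighting in linear space and encoding at the end.
print(linear_to_srgb(wanted))  # 188 is the value that really displays as 50%
```

So a shader that wants “half as bright” has to output roughly 188, not 128, which is the mismatch the quote describes.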

Made harder by brightness being perceived non-linearly. We can detect a change in brightness much more easily in a dark area than in a light one: if the RGB value is 10% and shifts to 15%, we’ll notice it. But if it’s 80% and shifts to 85%, we probably won’t.
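One rough way to see why the same five-point step reads so differently is to look at the relative change rather than the absolute one. This is only a back-of-the-envelope sketch: it treats the percentages as plain intensities and uses a simple ratio, which is an assumption and not a full model of human vision.

```python
def relative_change(before, after):
    """Size of a brightness step as a fraction of the starting brightness."""
    return (after - before) / before


dark_step = relative_change(0.10, 0.15)    # 10% -> 15%
bright_step = relative_change(0.80, 0.85)  # 80% -> 85%

print(f"dark area:   {dark_step:.1%} brighter")
print(f"bright area: {bright_step:.2%} brighter")
```

The absolute step is identical in both cases, but in the dark area it is half again as bright as the starting point, while in the bright area it is only a few percent.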

Reminds me of a video I watched where they tested human perception of 60/90/120 FPS. They had an all white screen and flashed a single frame of black at various frame rates, and participants would press a button if they saw the change. Then repeated for an all black screen and a single frame of white would flash.

At 60 and 90 FPS, most participants saw the flash. At 120 FPS, they only saw the change going from black to white.

Sick!

The guy was a fine arts major too. Bunch of stem majors couldn’t figure it out for themselves.

As a person who tends to come from the technical side, this doesn’t surprise me. Without a deep understanding of the subject, how would you come up with an accurate formula or algorithm to meet your needs? And with lighting in a physical space, that would be an arts major, not a math or computing major.

Most of my best work (which I’ll grant is limited) came from working with experts in their field and producing systems that matched their perspectives. I don’t think this diminishes his accomplishments, but rather emphasizes the importance of seeking out those experts and gaining an understanding of the subject matter you’re working with to produce better results.

Yeah. I have a STEM degree and work in a STEM field, and it’s a bummer to see just how… not well rounded people’s educations are. Otherwise intelligent people that seem incapable of critical thinking.

Justice!

Fixing anything in industry is like fighting a very big, very lazy elephant seal bull

“Just code around it. This is the way it has always been done, and the way it always will be done.”

Hardware takes a long time to change.

A temporary patch while we wait for hardware is the way to go.

Nothing is more permanent than a temporary fix.

They already fixed it in software we don’t need to prioritize the hardware fix.

Like getting devs to stop using TAA

“the garbage trend is to produce a noisy technique and then trying to “fix” it with TAA. it’s not a TAA problem, it’s a noisy garbage technique problem…if you remove TAA from a ghosty renderer, you have no alternative of what to replace it with, because the image will be so noisy that no single-shot denoiser can handle it anyway. so fundamentally it’s a problem with the renderer that produced the noisy image in the first place, not a problem with TAA that denoised it temporally”

(this was Alexander Sannikov (a Path of Exile graphics dev) in an argument/discussion with Threat Interactive on the Radiance Cascades discord server, if anyone’s interested)

Anyways, it’s really easier said than done to “just have a less noisy technique”. Most of the time, it comes down to this choice: would you like worse, blobbier lighting and shadows, or would you like a little bit of blurriness when you’re moving? Screen resolution keeps getting higher, and temporal techniques such as DLSS keep getting more popular, so I think you’ll find that more and more people are going to go with the TAA option.

I’ll take worse anything over a blurry vaseline smeared image during motion. The fact that devs of high speed games like shooters think this is the most acceptable compromise is bewildering.

What’s TAA?

antialiasing and denoising through temporal reprojection (using data from multiple frames)

it works pretty well imo but makes things slightly blurry when the camera moves, it really depends on the person how much it bothers you

it’s in a lot of games because their reflections/shadows/ambient occlusion/hair rendering etc need it, it’s generally cheaper than MSAA (taking multiple samples on the edges of objects), it can denoise specular reflections, and it works much more consistently than SMAA or FXAA

modern upscalers (DLSS, FSR, XeSS) basically are a more advanced form of taa, intended for upscaling, and use the ai cores built into modern gpus. They have all of the advantages (denoising, antialiasing) of taa, but also generally show blurriness in motion.
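The core of the temporal part can be sketched in a few lines of Python. This is a toy, not a real TAA pass: it assumes a static camera so there is no reprojection or history rejection, the frames are flat lists of brightness values, and the blend factor `alpha` and helper names are invented for illustration.

```python
import random


def taa_accumulate(history, current, alpha=0.1):
    """Blend the current frame into the history buffer (exponential moving average)."""
    return [h + alpha * (c - h) for h, c in zip(history, current)]


def noisy_frame(true_value, pixels, noise, rng):
    """One frame of a noisy effect: the true value plus uniform noise per pixel."""
    return [true_value + rng.uniform(-noise, noise) for _ in range(pixels)]


def mean_abs_error(frame, true_value):
    """Average distance of the frame's pixels from the value they should have."""
    return sum(abs(p - true_value) for p in frame) / len(frame)


rng = random.Random(0)

# Denoising: accumulate 60 noisy frames of a surface whose true brightness is 0.5.
history = noisy_frame(0.5, 1000, 0.3, rng)
for _ in range(60):
    history = taa_accumulate(history, noisy_frame(0.5, 1000, 0.3, rng))

single = noisy_frame(0.5, 1000, 0.3, rng)
print("single-frame error:", round(mean_abs_error(single, 0.5), 3))
print("accumulated error: ", round(mean_abs_error(history, 0.5), 3))

# Ghosting: a pixel that jumps from 0 to 1 only catches up gradually.
pixel = [0.0]
trail = []
for _ in range(10):
    pixel = taa_accumulate(pixel, [1.0])
    trail.append(round(pixel[0], 3))
print("catch-up after a sudden change:", trail)
```

The same blend that averages the noise away is what smears things when the image changes, which is the trade-off being argued about above.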

Interesting read.

Now can somebody please fix the horrible volume sliders on everything.

I just max all the volume sliders and use voicemeeter to control the volume levels of the different audio sinks. Although I guess that doesn’t help if you’re trying to leave game sfx audio at max volume but lower in game music or something.

Started doing this and I’ll never go back.

Go on

I’m impressed by how well my Steam Deck plays even unsupported games like Estival Versus.

I just wish they bundled it with a browser. Any browser, even Internet Explorer, would have been better than an “Install Firefox” icon that takes me to a broken store. I had to search for and enter cryptic commands to get started. I’m familiar with Debian distros and APT, what the hell is a Flatpak? Imagine coming from Windows or macOS and your first experience of Linux is the store being broken. It was frustrating for me because even with 20 years of using and installing Linux, at least the base installation always comes with some functioning browser even if the repos are out of sync or dead.

The most recent version of Steam on the Deck addresses this if you go to the “Non-Steam Game” page. It gives you the option to install Chrome and add it as a non-steam shortcut with one click. It also has a short note about how you can easily do it with any app in Desktop Mode, and that they’re working on a way to do that within the Gaming Mode interface.

Not ideal, but they’re working on it. Also, would prefer Firefox obv.

What store was broken?

Edit: I ask which store, because while I prefer CLI for updating and maintaining packages on my main device, the Discover store has always worked perfectly fine for me. And if you’re using it on the Steam Deck level (SteamOS is immutable), then you don’t really need to understand what a Flatpak is any more than you understand what an .exe is. Think of them as literally the same thing for your purposes.

And if anything, maintaining and updating programs is way easier than Windows as it does it all automatically.

there is chrome now

Yep. Now imagine it happening in the space program, because it totally does

I think modern graphics cards are programmable enough that getting the gamma correction right is on the devs now. Which is why it’s commonly wrong (not in video games and engines, they mostly know what they’re doing). Windows image viewer, ImageGlass, Firefox, and even Blender do the color blending in images without gamma correction (for its actual rendering, Blender does things properly in the XYZ color space; it’s just the image sampling that’s different, and only in Cycles). It’s basically the standard, even though it leads to these weird zooming effects on pixel-perfect images as well as color darkening and weird hue shifts, while being imperceptibly different in all other cases.

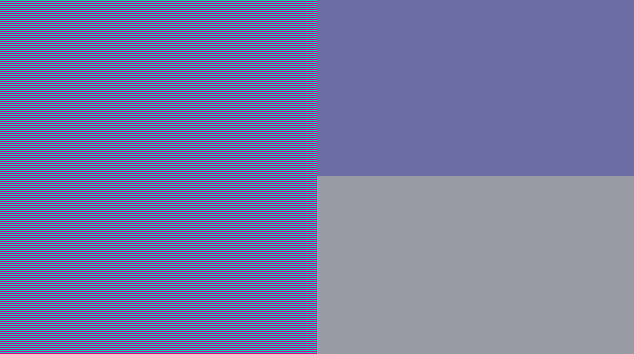

If you want to test a program yourself, use this image:

Try zooming in and out. Even if the image is scaled, the left side should look the same as the bottom of the right side, not the top. It should also look roughly like the same color regardless of its scale (excluding some moire patterns).
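Here is a small Python sketch of what goes wrong when a scaler averages pixels without gamma correction. It assumes the sRGB transfer curve and uses two made-up pixel colours, not the actual contents of the test image; the function names are also invented for illustration.

```python
def decode(value_8bit):
    """8-bit sRGB channel value -> linear light intensity in [0, 1]."""
    c = value_8bit / 255.0
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def encode(intensity):
    """Linear light intensity in [0, 1] -> 8-bit sRGB channel value."""
    c = max(0.0, min(1.0, intensity))
    if c <= 0.0031308:
        encoded = c * 12.92
    else:
        encoded = 1.055 * c ** (1 / 2.4) - 0.055
    return round(encoded * 255)


def naive_blend(a, b):
    """Average two RGB pixels directly on the stored values (no gamma correction)."""
    return tuple(round((x + y) / 2) for x, y in zip(a, b))


def linear_blend(a, b):
    """Average two RGB pixels in linear light, then re-encode the result."""
    return tuple(encode((decode(x) + decode(y)) / 2) for x, y in zip(a, b))


red = (255, 0, 0)
green = (0, 255, 0)

print("naive: ", naive_blend(red, green))   # darker and muddier than it should be
print("linear:", linear_blend(red, green))  # matches what the eye sees from a distance
```

A fine pattern of alternating pixels viewed from far away mixes as light, so it looks like the linear result. A program that downsamples with the naive average produces the darker colour instead, which is why the image appears to change shade as you zoom.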

On that web page, the left side is similar to the bottom, but on Lemmy, the left side looks purple like the top right. The bottom right appears grey to me. Is that supposed to happen?

Same experience here. On the website, the left side never fully matched either shade on the right side to me, appearing closer to the bottom but always somewhere in between most of the time. But when I view the screenshot linked above in my mobile app for Lemmy, it looks a lot closer to the top than the bottom, but varies a bit based on how much I zoom in (almost like moire patterns).

On Lemmy, the image is definitely not producing the same effect as on the website. On the phone, I can do incremental zooms of the page. If I zoom very lightly there is a sweet spot where it looks exactly like the top, but fully zoomed out (the default view) it looks the same as the bottom. Clearly there is a problem with scaling the image, unlike what /u/AdrianTheFrog said.

People here have no idea how any of this works, or why the lighting was off.

CRT monitors did not display light intensity linearly. Remember gamma? That was it, gamma correction. GPU chips at the time practically had to have that in. And it didn’t even matter that much if it was a bit off because our eyes are not linear. Like, remember Quake? Nobody cared that Quake was not color accurate.

The GPU manufacturers knew it all, be it Nvidia, ATI, or 3dfx. Color spaces were well known, and nobody had a color accurate monitor at home anyway. Even today you can buy a monitor that’s way off.

Maybe that guy did get them to care more about it, but I cannot read such a “hateful” article to make a conclusion (I did skim it).

Anyway none of it matters now when color is in 32-bit floats and all the APIs support multiple color spaces.