You’re right. I got lazy.

Data Science

- 202 Posts

- 748 Comments

1·17 days ago

I’d make a blind bet on that over Matrix for suitability.

1·17 days ago

In that case we could all just use email.

13·17 days ago

That doesn’t explain why they don’t start a transition by posting to both the new platform and the old. Nor does it explain why they don’t include links to their new accounts on their websites.

31·17 days ago

Matrix and XMPP don’t even pretend to be a Discord replacement.

41·17 days ago

Unfortunately the accounts listed under “Social network accounts of Debian teams” and “Social network accounts of Debian contributors” are almost exclusively Twitter accounts.

3·17 days ago

Unfortunately the accounts listed under “Social network accounts of Debian teams” and “Social network accounts of Debian contributors” are almost exclusively Twitter accounts.

4·17 days ago

Unfortunately the accounts listed under “Social network accounts of Debian teams” and “Social network accounts of Debian contributors” are almost exclusively Twitter accounts.

17·19 days ago

From the article:

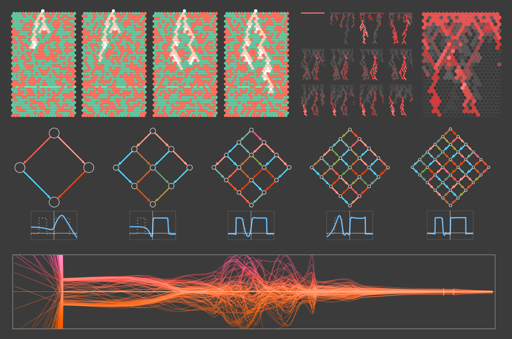

DeepSeek-R1 release leaves open several questions about:

- Data collection: How were the reasoning-specific datasets curated?

- Model training: No training code was released by DeepSeek, so it is unknown which hyperparameters work best and how they differ across different model families and scales.

- Scaling laws: What are the compute and data trade-offs in training reasoning models?

These questions prompted us to launch the Open-R1 project, an initiative to systematically reconstruct DeepSeek-R1’s data and training pipeline, validate its claims, and push the boundaries of open reasoning models. By building Open-R1, we aim to provide transparency on how reinforcement learning can enhance reasoning, share reproducible insights with the open-source community, and create a foundation for future models to leverage these techniques.

In this blog post we take a look at key ingredients behind DeepSeek-R1, which parts we plan to replicate, and how to contribute to the Open-R1 project.

16·23 days ago

I didn’t read your post correctly. Yeah, that’s harassment at the very least. No better than someone screaming at a retail worker because of some corporate policies.

19·24 days ago

It’s all about your organization’s size and whether the organization makes use of the Anaconda-controlled `defaults` channel. I’m not a lawyer, but your company may be liable for a licensing fee if it is using Anaconda’s repository of binaries. You’d need to consult an actual lawyer for a more reliable assessment of your potential liability.

Switch to using miniforge and the conda-forge channel when installing and using Conda.

4·24 days ago

Conda itself is outside of Anaconda, Inc’s control.

3·24 days ago

Miniforge should default to conda-forge. Perhaps an old installation is configured to use the Anaconda, Inc.-maintained `defaults` channel.

Conda-forge.org provides a guide to rid your environments of the `defaults` channel.

https://conda-forge.org/docs/user/transitioning_from_defaults/
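As a rough illustration of the channel cleanup described above, here is a minimal Python sketch. It assumes you have already copied your channel list out of `conda config --show channels` or your `.condarc`; the function names `is_anaconda_channel` and `switch_to_conda_forge` are hypothetical helpers, not part of Conda. It only computes what the list should look like and does not modify any configuration. The linked guide remains the authoritative procedure.

```python
from typing import List


def is_anaconda_channel(channel: str) -> bool:
    """Return True for the `defaults` alias or a channel URL on repo.anaconda.com.

    Assumption: these two patterns are enough to spot Anaconda-hosted
    channels in a typical channel list.
    """
    name = channel.strip().lower()
    return name == "defaults" or "repo.anaconda.com" in name


def switch_to_conda_forge(channels: List[str]) -> List[str]:
    """Drop Anaconda-hosted channels and put conda-forge first.

    Other channels keep their relative order.
    """
    kept = [
        c for c in channels
        if not is_anaconda_channel(c) and c.strip().lower() != "conda-forge"
    ]
    return ["conda-forge"] + kept


if __name__ == "__main__":
    current = ["defaults", "bioconda", "conda-forge"]  # example input only
    print(switch_to_conda_forge(current))
```

If the result differs from your current list, that is a hint the installation still references the `defaults` channel somewhere.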

27·1 month ago

It’s better not to make resume-driven decisions. Just do whatever you are interested in.

13·1 month ago

Embedded software development has dramatically advanced over the past decade. What does that mean for bare-metal programming?

At a Glance

- Bare-metal programming is an essential skill as it enables you to understand what your system is doing at the lowest levels.

- Even if you spend your days working with abstraction layers, bare-metal programming will guide you should abstractions fail.

- And bare-metal skills can provide a solid foundation for troubleshooting and debugging.

2·3 months ago

These two are my favorite balance of fundamentals and getting to purposeful application as quickly as possible (the first link is definitely not enough, but combined with the second she should be comfortable with the syntax and able to get basic things working):

https://www.kaggle.com/learn/intro-to-programming

https://www.kaggle.com/learn/python

This one takes its time with fundamentals and includes some projects to put them in the context of building something. It’s presented on Google Colab and Jupyter notebooks: https://allendowney.github.io/ThinkPython/

Working with GIS data means cleaning data. This one covers that and a lot of common analysis tools and techniques. But it assumes a bit of programming knowledge (good to follow up with after one of the options above): https://wesmckinney.com/book/

5·4 months ago

FYI - The URL in the post is:

https://github.com/Dark-Alex-17/managarrl

But the correct URL is:

https://github.com/Dark-Alex-17/managarr

1·4 months ago

Paste from clipboard is different from paste from primary selection.

I’m talking about posting on their website a link to alternative social media accounts.