- cross-posted to:

- [email protected]

- [email protected]

- [email protected]

- [email protected]

iPhones have been exposing your unique MAC despite Apple’s promises otherwise: “From the get-go, this feature was useless,” researcher says of feature put into iOS 14.

tl;dr It was a bug. It is fixed in 17.1.

This is whitewashing Apple. The bug was introduced in iOS 14. A trillion-dollar company like Apple should have had this fixed long before.

Lol, and Apple didn’t even “discover” it themselves. It was 2 unaffiliated security researchers who did. Who knows if they even implemented any logic besides the UI.

If you had read the article, you would have known that the bug relates to a very specific field inside a multicast payload and a network-specific unique MAC address is generated and retained as advertised. I’m not defending Apple; just reiterating the facts.

The way IPv4 multicast works is that the destination MAC address starts with 01 00 5e, and the rest is filled from the low 23 bits of the destination IP address (MAC addresses are 6 octets long, and the top bit of the fourth octet is always 0). The MAC address is always built this way when constructing the L2 headers for the packet.
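To make that mapping concrete, here is a minimal Python sketch of the standard IPv4 multicast-to-MAC mapping. The function name `multicast_mac` is made up for illustration; 224.0.0.251 is the mDNS group address that goes with port 5353/UDP.

```python
import ipaddress

def multicast_mac(ip: str) -> str:
    """Map an IPv4 multicast address to its Ethernet destination MAC."""
    addr = ipaddress.IPv4Address(ip)
    if not addr.is_multicast:
        raise ValueError(f"{ip} is not an IPv4 multicast address")
    # Keep only the low 23 bits of the IP; the 24th bit is dropped.
    low23 = int(addr) & 0x7FFFFF
    # Fixed 01:00:5e prefix in the top three octets.
    mac = (0x01005E << 24) | low23
    return ":".join(f"{b:02x}" for b in mac.to_bytes(6, "big"))

print(multicast_mac("224.0.0.251"))  # mDNS group
```

Note that nothing about the sender ends up in this destination address, so whatever leaked had to be somewhere else in the packet.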

It’s not specified what precisely is provided in the payload of the multicast body. I suspect that the original MAC address is included in something like a Bonjour broadcast, but I wasn’t able to find any documentation that confirms that.
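If the real MAC does ride along in the payload, anyone on the network could check for it with something like the sketch below. This is only an illustration under my own assumptions: the helper name `payload_leaks_mac` is hypothetical, the example bytes are invented, and since the article doesn't name the field, the code just searches the whole payload for the address in raw or colon-separated text form.

```python
def payload_leaks_mac(payload: bytes, mac: str) -> bool:
    """Return True if the MAC appears in the payload, raw or as ASCII text."""
    # Raw 6-byte form, e.g. b"\xa1\xb2\xc3\xd4\xe5\xf6"
    raw = bytes.fromhex(mac.replace(":", ""))
    # Text form, e.g. b"a1:b2:c3:d4:e5:f6" (compared case-insensitively)
    text = mac.lower().encode("ascii")
    return raw in payload or text in payload.lower()

# Invented example data, not a real capture.
real_mac = "a1:b2:c3:d4:e5:f6"
leaky = b"\x00\x01" + bytes.fromhex("a1b2c3d4e5f6") + b"\x00"
clean = b"\x00\x01" + bytes.fromhex("020000000001") + b"\x00"

print(payload_leaks_mac(leaky, real_mac))
print(payload_leaks_mac(clean, real_mac))
```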

apple should have had this fixed long before

Not if it was intentional. I mean, Apple bends over for authoritarian governments around the world. This could easily be used as a state surveillance apparatus and casually “fixed” when discovered down the road as a “bug”.

yeah I agree that it was intentional. I can’t believe Apple didn’t properly test this feature. But I didn’t want to speculate without actual proof

Why not? Everyone else seems to be doing it. You’re probably just some Portuguese pastry chef with a bad haircut and a paid-off mortgage.

Hmm, tldr bot didn’t mention this…

This is why we call it artificial intelligence, rather than digital intelligence.

This is the best summary I could come up with:

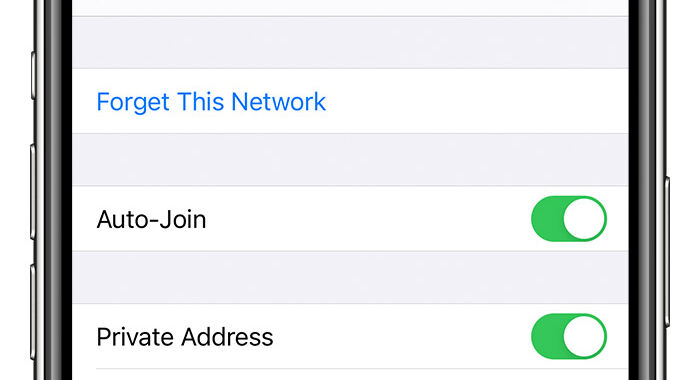

Three years ago, Apple introduced a privacy-enhancing feature that hid the Wi-Fi address of iPhones and iPads when they joined a network.

Enter CreepyDOL, a low-cost, distributed network of Wi-Fi sensors that stalks people as they move about neighborhoods or even entire cities.

In 2020, Apple released iOS 14 with a feature that, by default, hid Wi-Fi MACs when devices connected to a network.

Over time, Apple has enhanced the feature, for instance, by allowing users to assign a new private Wi-Fi address for a given SSID.

In fairness to Apple, the feature wasn’t useless, because it did prevent passive sniffing by devices such as the above-referenced CreepyDOL.

But the failure to remove the real MAC from the port 5353/UDP still meant that anyone connected to a network could pull the unique identifier with no trouble.

The original article contains 680 words, the summary contains 136 words. Saved 80%. I’m a bot and I’m open source!

Very useful tech for schools, supermarkets and malls. I wonder if Apple will patch the 5353 port issue.

I don’t understand why this article isn’t BS. It was meant to prevent passive snooping. If I connect to a network, it needs to know who I am.

I’ve worked with companies that implement this type of tech for monitoring road traffic congestion. iOS reduced the number of “saw the same phone twice and can calculate speed” matches.

You are who you say you are to a network though, at least at layer 2.

If you say you’re one MAC address one time and another next time then so you are.

Let me give you an example. Let’s say I’m a device trying to connect to a network. Among other things I tell it “can I have an IP address, my MAC address is Majestic”. It says in turn, sure and notes down Majestic and routes or switches things to me when another device says it wants to reach my IP. In Wi-Fi it basically shouts out it has a packet for Majestic and sends it out onto the air with my unique encryption key I previously negotiated and I am listening for packets for Majestic and grab and process that packet. Now if I go back and connect again and call myself Dull it’ll do the same thing. Those names being stand-ins for MAC addresses of course.

This is simplified of course. And this is why MAC whitelisting is a futile attempt at security.
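The Majestic/Dull exchange above can be sketched as a toy lease table. This is a deliberately simplified illustration, not how any real DHCP server is implemented; the class name `ToyNetwork` and the 192.168.1.0/24 range are assumptions for the example.

```python
import ipaddress

class ToyNetwork:
    """Hands out addresses keyed only by whatever MAC a client claims."""

    def __init__(self, network: str = "192.168.1.0/24"):
        self._free = iter(ipaddress.ip_network(network).hosts())
        self.leases = {}  # claimed MAC -> assigned IP

    def request(self, claimed_mac: str) -> str:
        # The network has no way to verify the claim; a new name is a new device.
        if claimed_mac not in self.leases:
            self.leases[claimed_mac] = str(next(self._free))
        return self.leases[claimed_mac]

net = ToyNetwork()
print(net.request("Majestic"))  # first visit
print(net.request("Dull"))      # same phone, different claimed MAC
print(net.request("Majestic"))  # reusing the old name gets the old lease back
```

The same lookup is all a MAC whitelist has to go on, which is why claiming an allowed address is enough to get past it.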

Get a Google Pixel. Use GrapheneOS.

Step 1: Give the surveillance company money

deleted by creator

“full stop”

The Murena 2 doesn’t have a secure element like Pixels do with their Titan M chip. That is also the reason why the Graphene devs don’t bother with porting their OS to phones like the Fairphone or Murena. The only Android phone that can be as secure if not more secure than an iPhone is the Google Pixel running GrapheneOS. Edit: You can watch this video to learn why a secure element is important for maintaining good security: https://piped.video/watch?v=yTeAFoQnQPo

The Titan M chip is a Trusted Platform Module. The pixel phone isn’t the only one to have that. How To Geek has a simple explanation. Stock Android can take advantage of it from the get go thanks to the hardware backed keystore.

Verified boot is not Google Pixel related either. It’s been there since Android 4.4. It isn’t specific to phone hardware either, as standard PCs have something similar: UEFI, which allows secure boot. Here’s a great article on how it works with Linux.

The rest of the video focuses on software-related security, not hardware. I find it very hard to believe that no other vendor fulfills the specs required for GrapheneOS. Honestly, I believe the devs just want to limit the amount of work they have for themselves, which is fine, but they don’t have to go to the lengths of claiming “Google is the only vendor to make secure hardware”. That just doesn’t seem believable at all.

AFAIK the Titan M series is by far the strongest implementation that can be found in a phone. I’m not aware of any other commercially available chip that supports as many security features, like Insider Attack resistance, the Weaver API, Android StrongBox, etc. Also, there are still many phones on the market that don’t have a secure element at all.

deleted by creator

Better than giving them your data. They can’t use your money to ruin your life, but they can very well do that with your data.

You’re kidding right? Money can’t ruin lives?

It definitely can. But Google won’t randomly spend money in order to ruin your life. They will do that with your data though as can be seen in many unfortunate cases like these: https://www.phoenixnewtimes.com/news/google-geofence-location-data-avondale-wrongful-arrest-molina-gaeta-11426374 https://www.nytimes.com/2022/08/21/technology/google-surveillance-toddler-photo.html And it’s not just Google. Any data you expose can and will at some point be used to absolutely fuck you. The Snowden leaks have proven this.

That’s a direct impact, for sure, but money gives them power to build better surveillance, influence the public, influence politics, buy up competition and so much more. They affect you indirectly and over a much longer time-period.

Check out Swappa

Pixels are too expensive. And you will support a private-data-hungry evil company.

Compared to Apple?

Compared to the other Android-based phone manufacturers.

Privacy shouldn’t be so expensive.

Pixels are too expensive.

My Pixel 6a was 300 bucks. It’s not that expensive, and I get security updates until 2027. 8th-generation Pixels even get 7 years of updates.

And you will support a private-data-hungry evil company.

Pixels are the only phones that allow you to fully erase everything related to Google and at the same time keep good security.