As you all might be aware, VMware is hiking prices again. (A surprise to no one.)

Right now Hyper-V seems to be the most popular choice and Proxmox appears to be the runner-up. Hyper-V is probably the best for Windows shops but my concern is that it will just become Azure-tied at some point. I could be wrong but somehow I don’t trust Microsoft to not screw everyone over. They already deprecated WSUS, which is a pretty popular tool for Windows environments.

Proxmox seems to be a great alternative that many people are jumping on. It is still missing some bigger features but things like the data center manager are in the pipeline. However, I think many people (especially VMware admins) are fundamentally misunderstanding it.

Proxmox is not that unique and is built on FOSS. You could probably put together a Proxmox-like system without being completely in over your head. It is just KVM libvirt/qemu and corosync along with some other stuff like ZFS.

What Proxmox does provide is convenience and reliability. It takes time to make a system and you are responsible when things go wrong. Doing the DIY method is a good exercise but not something you want to run in prod unless you have the proper staff and skillset.

And therein lies the problem. There are companies coming from a Windows/point-and-click background who don’t have staff that understand Linux. Proxmox is just Debian under the hood, so it is vulnerable to all the same issues. You can install updates with the GUI, but if you don’t understand how Linux packaging works you may end up in a situation where you blow off your own foot. The same goes for networking and filesystems. To effectively maintain a Proxmox environment you need expertise. Proxmox makes it very easy to switch to cowboy mode and break the system. It is very flexible, but you must be very wary of making changes to the hypervisor, as that’s the foundation for everything else.

I personally wish Proxmox would seriously consider an immutable architecture. TrueNAS already does this and it would be nice to have a solid update system. They could do a standalone OS image or they could use something based on OSTree. Maybe even build in an update manager that can update each node and check its health.

Just my thoughts

I think you are looking at this wrong. Proxmox is not prod ready yet, but it is improving, and the market is pushing the incumbents into crappier service at higher prices. Broadcom is making VMware dip below the RoI threshold, and Hyper-V will not survive when it is dragging customers away from the Azure cash cow. The advantage of Proxmox is that it will persist after the traditional incumbents are afterthoughts (think XenServer). That’s why it is a great option for the homelab or a lab environment with previous-gen hardware. Proxmox is missing huge features: VMs hang unpredictably if you migrate them across hosts with different CPU architectures (Intel -> AMD), there is no cluster-wide startup order, and things like DRS equivalents are still separate plugins. That being said, knowing it now and submitting feedback or patches positions you to have a solution when MS and Broadcom price you out of on-prem.

Hyper-V will not survive when it is dragging customers away from the Azure cash cow

Pretty sure that’s why they made Azure Stack HCI. It’s Hyper-V, but it doesn’t work without an up-to-date Azure subscription, and it charges you monthly fees to run VMs on hardware you own.

It’s great, the worst of both worlds… Fucking thing doesn’t even report on disk provisioned, only utilization, so get fucked if you want to capacity plan without writing your own report script.
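For anyone stuck writing that report by hand, here is a minimal sketch of the provisioned-versus-used roll-up. The `VirtualDisk` records, the VM names and all the numbers are made up for illustration; in practice they would come from whatever inventory export you can get out of the platform. Nothing here calls a real Azure Stack HCI API.

```python
from dataclasses import dataclass


@dataclass
class VirtualDisk:
    # Hypothetical record; fill it from your own inventory export.
    vm: str
    provisioned_gib: float  # size the guest was promised
    used_gib: float         # space actually written so far


def capacity_report(disks, pool_gib):
    """Summarize provisioned vs. used space against the usable pool size."""
    provisioned = sum(d.provisioned_gib for d in disks)
    used = sum(d.used_gib for d in disks)
    return {
        "provisioned_gib": provisioned,
        "used_gib": used,
        "overcommit_ratio": provisioned / pool_gib,  # > 1.0 means thin-provisioned past capacity
        "utilization": used / pool_gib,
    }


# Invented sample data.
disks = [
    VirtualDisk("web01", 200, 50),
    VirtualDisk("db01", 500, 300),
    VirtualDisk("files01", 1000, 150),
]
report = capacity_report(disks, pool_gib=1000)
print(report)
```

Utilization alone would show this pool as half full, while the overcommit ratio shows it has been promised well past what it can hold, which is the number capacity planning actually needs.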

Proxmox has those features. Also, I personally wouldn’t mix CPU archs, but you should be able to as it is all KVM. Maybe there is a different memory layout or something.

I’m battling this right now; it SHOULD work but does not work consistently. Again, homelab, not an ideal environment. I’m going from 2 R710s with Xeons to a 3-node cluster with the 710s and an EPYC R6525. Sometimes VMs migrate fine, sometimes they hang and have to be fully reset. Ultimately this was fine as I didn’t migrate much, but then I slapped on a DRS-like thing, and I see it more. I’ve been collecting logs and submitting diagnostics; even pegging the VMs to a common CPU arch didn’t fix it.

To that end, DRS alternatives are still mostly plugins. This was the go-to, but then it was abandoned:

https://github.com/cvk98/Proxmox-load-balancer

And now I’m getting ready to go deeper into this, but I want to resolve the migration hangs first:

Proxmox has load balancing capabilities built in. You can just toggle it on and Proxmox will level everything out. However, if you are having issues with VMs hanging I would get that resolved first.

I’ve never done a live transfer between AMD and Intel so maybe there is more to the story. Make sure you get on the Proxmox forums as that’s where the developers hang out.

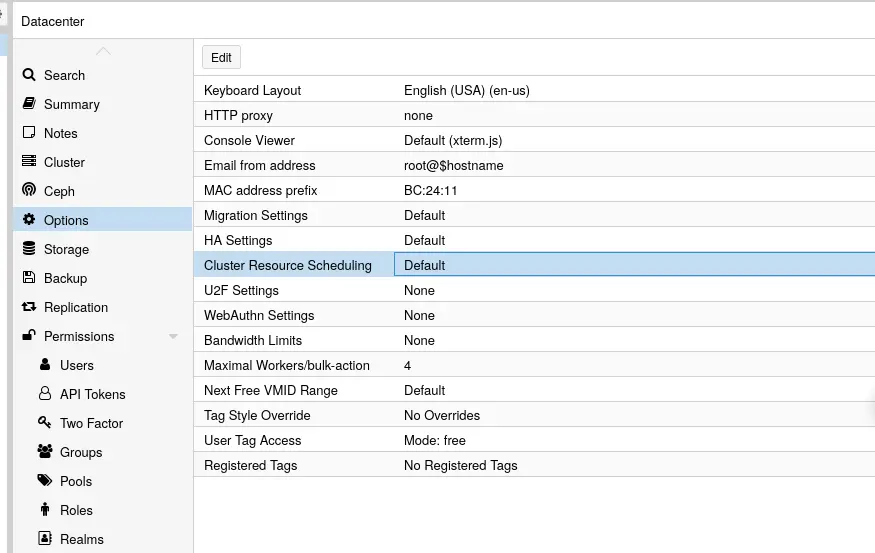

Where do you see the load balancing feature? Searching for exactly that was what got me to ProxLB. I have HA groups and fences, but that’s less resource allocation than failure resolution in my experience. My cluster is 8.2.7.

I posted to the forums, but I got a “YMMV” kind of answer; the docs say it’s technically unsupported: https://pve.proxmox.com/pve-docs/chapter-qm.html#_requirements

The hosts have CPUs from the same vendor with similar capabilities. Different vendor might work depending on the actual models and VMs CPU type configured, but it cannot be guaranteed - so please test before deploying such a setup in production.

I’m setting the CPU Type to x86-64-v2-AES, which is the highest my Westmere CPUs can do. I have a path to getting all 3 nodes to the 6525 hardware, pending some budget and some decomm’s at work.
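A quick way to sanity-check a common baseline like that is to compare the CPU flags each node reports. The sketch below assumes you have copied the `flags` line from `/proc/cpuinfo` on every host; the `REQUIRED` set is my reading of the x86-64-v2 feature level plus AES, not something taken from the Proxmox source, and the sample host data is invented and heavily truncated.

```python
# Flag names as they appear in the "flags" line of /proc/cpuinfo on Linux.
# Assumption: this set approximates what x86-64-v2-AES expects of the host.
REQUIRED = {"cx16", "lahf_lm", "popcnt", "pni", "ssse3", "sse4_1", "sse4_2", "aes"}


def parse_flags(cpuinfo_text):
    """Return the CPU flag set from the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            return set(line.split(":", 1)[1].split())
    return set()


def missing_flags(hosts, required=REQUIRED):
    """Map each host name to the required flags it lacks (empty dict = all good)."""
    gaps = {}
    for name, flags in hosts.items():
        lacking = required - flags
        if lacking:
            gaps[name] = sorted(lacking)
    return gaps


# Invented, truncated sample data for a mixed-vendor cluster.
hosts = {
    "r710-a": parse_flags("flags\t: fpu sse2 pni ssse3 cx16 sse4_1 sse4_2 popcnt aes lahf_lm"),
    "r6525": parse_flags("flags\t: fpu sse2 pni ssse3 cx16 sse4_1 sse4_2 popcnt aes lahf_lm avx2 sha_ni"),
}

common = set.intersection(*hosts.values())
print("common flags:", sorted(common))
print("hosts missing required flags:", missing_flags(hosts))
```

Passing this check only means the guest CPU model is representable on every node. As the hangs described here show, it does not guarantee that cross-vendor live migration behaves.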

I’ve read extensively about that, and this thread was very helpful, and my understanding is that’s still not really a DRS equivalent, but more of a recovery mode: https://forum.proxmox.com/threads/ha-cluster-resource-scheduling-filling-in-the-missing-pieces-from-the-docs.139187/

Yeah I just know it goes beepity boop and then the VMs move around. My deployment is also pretty small so it really doesn’t matter for me.

I suppose that’s the difference between Windows admins and Linux admins. Windows admins are used to click their way through things with fancy GUIs and wizards :)

I’ve been using Proxmox professionally for years now, and not once did I have a problem I could not fix myself. But I’m used to solving problems myself by “digging in deep”. Of course it helps that I’ve been using Linux (mostly Debian) since 1996. There are plenty of guides around that teach how Debian works. Windows admins who make the switch just need to take the time to read them, watch the videos, do the research.

The only skills we were born with are the basic survival skills. Everything else is learned along the way. So was using Windows, and so is using Linux.

But that’s just my opinion :)

The problem isn’t necessarily the GUI. The problem is there are a lot of admins who don’t understand what is happening under the hood. I’ve talked to people of all ages who have no understanding of how basic things like networking work. I’ve also talked to people of all ages who have a deep understanding of the system.

The biggest takeaway is that to be a good admin you need to understand the details. Don’t be afraid to dig in. Either dig in, move to management, or both.

Exactly! :)

I manage macOS, Linux AND Windows. In the end, they’re all the same. It’s software that needs management.

I’ve been using Proxmox professionally for years now, and not once did I have a problem I could not fix myself.

And how many of the environments you have left behind became an unmanageable mess when the company couldn’t hire someone with your skillset? One of the downsides to this sort of DIY infrastructure is that it creates a major dependency on a specific skillset. That isn’t always bad, but it does create a risk which business continuity planning must take into account. This is why things like OpenShift or even VMware tend to exist (and be expensive). If your wunderkind admin leaves for greener pastures, your infrastructure isn’t at risk if you cannot hire another one. The major, paid-for options tend to have support you can reach out to, and you are more likely to find admins who can maintain them. It sucks, because it means that the big products stay big because they are big. But the reality of a business is that continuity in the face of staff turnover is worth the licensing costs.

This line, from the OP’s post, is kind of telling as to why many businesses choose not to run Proxmox in production:

It is just KVM libvirt/qemu and corosync along with some other stuff like ZFS.

Sure, none of those technologies are magic; but when one of them decides to fuck off for the day, if your admin isn’t really knowledgeable about all of them and how they interact, the business is looking at serious downtime. Hell, my current employer is facing this right now with a Graylog infrastructure. Someone set it up, and it worked quite well, a lot of years ago. That person left the company and no one else had the knowledge, skills or time to maintain it. Now that my team (Security) is actually asking questions about the logs it’s supposed to provide, we realize that the neglect is causing problems and no one knows what to do with it. Our solution? Ya, we’re moving all of that logging into Splunk. And boy howdy is that going to cost a lot. But it means that we actually have the logs we need, when we need them (Security tends to be pissy about that sort of thing). And we’re not reliant on always having someone with Graylog knowledge. Sure, we always need someone with Splunk knowledge. But that’s a much easier ask. Splunk admins are much more common and probably cheaper. We’re also a large enough customer that we have a dedicated rep from Splunk whom we can email with a “halp, it fell over and we can’t get it up” and have Splunk engineers on the line in short order. That alone is worth the cost.

It’s not that I don’t think that Proxmox or Open Source Software (OSS) has a place in an enterprise environment. One of my current projects is all about Linux on the desktop (IT is so not getting the test laptop back. It’s mine now, this is what I’m going to use for work.). But using OSS often carries special risks which the business needs to take into account. And when faced with those risks, the RoI may just not be there for using OSS. Because, when the numbers get run, having software which can be maintained by those Windows admins who are “used to click their way through things” might just be cheaper in the long run.

So ya, I agree with the OP. Proxmox is a cool option. And for some businesses, it will make financial sense to take on the risks of running a special snowflake infrastructure for VMs. But for a lot of businesses, the risk of being very reliant on that one person who “not once [had a] problem I could not fix myself” just isn’t going to be worth taking.

Proxmox earns most of its money by giving professional support.

If this is your concern, then you can buy support directly from Proxmox, just like you could from VMware. So if I were ever to leave the company, and there’s no one with my skillset (which I think is terrible management, having your company depend on the skills of one person), my boss can sleep just as calmly as before, knowing we pay Proxmox for support.

Unlike VMware, paying for support from Proxmox will likely get you answers. Hell, they fix problems for unsubscribed users in a pretty timely manner.

I fought with VMware for years to get bugs fixed or even acknowledged. It eventually drove me out of the space, because they were pretty much the only solution for in-house virtualization and they did. not. give. a. fuck. about SMB.

I’d take a community-supported hypervisor long before VMware ever again. I’ve never felt frustrated trying to get something FOSS fixed like I have with companies like VMware and Microsoft.

God damn Linux admins are pretentious.

Thankfully I don’t consider myself a Linux admin. So I take no offense.

I suppose that’s the difference between Windows admins and Linux admins. Windows admins are used to click their way through things with fancy GUIs and wizards :)

That hasn’t been a thing for a decade. Any time you run into an issue or need to do something en masse, you fire up PowerShell.

I know plenty of Windows admins who still don’t use PowerShell as much as they could/should. Which I think is odd, since PowerShell is so much better, but I suppose it’s not easy to change old ways :)

This seems like an unnecessary dichotomy. Infrastructure has to be maintained, period. If you don’t want to maintain it yourself, pay a provider to maintain it for you. If you want to maintain it yourself, you damned well better be interested in understanding all the parts of it. Setting up a hypervisor in the office to ‘set it and forget it’ is not the way to do this.

Be wary or leery of making changes, not weary.

Good catch

I hear it all the time, and it jumps out at me. Sorry for being that guy lol.

Been using KVM for years. Works fine for me.

I assume you are using libvirt? Do you set up VM failover?

If you want something more appliance-like, XCP-ng is a very good option. The GUI of Xen Orchestra is also closer to vCenter in my opinion and should be easier to navigate than Proxmox.

It has a much higher barrier to entry. If they can make it easy to get on a makeshift network made of old hardware then I might look more into it. I want to use it for personal use before I even think of prod. Proxmox has been in homelabs for a while which helps quite a bit to mature the product.

It’s definitely more enterprise focused, but it’s using Linux, so there’s no reason why any old hardware couldn’t work.

The enterprise focus might make it easier for those coming from VMware in any case.

I’m not sure I’m parsing your fifth paragraph correctly. Are you suggesting Proxmox is DIY and unsuitable for Production? That Proxmox is suitable for Production and those who think they can roll their own hypervisor are in for a bad time? Something else?

What I am saying is that it depends. Proxmox is rock solid if you know what you are doing. It is a massive train wreck if you take the set-it-and-forget-it approach. Deploying Proxmox requires planning and an understanding of the system.

So yes it is good for production as long as you understand how it works.

OK. So we have a disagreement then. What part of Proxmox requires expertise?

You need to understand Debian and virtualization, and it would be great if you understood Linux storage.

There is a lot to learn about Proxmox specifically. It has its own features and tools that are important to understand (such as how to fix a broken cluster).

That’s no different from VMware or Hyper-V if you switch the specifics around. There are many more administrators running virtualization clusters that have very little knowledge of the internals than there are subject matter experts or weekend deep divers. The barrier to entry for these things is low because they’re designed well enough and half decently documented. Proxmox isn’t unique in this respect.

I’m going to be evaluating Nutanix and Azure Stack HCI. Proxmox just doesn’t fit, given what I can find in the way of support and admins to run it.

Nutanix is not especially cheap, in my opinion/experience, nor is it particularly easy to manage and maintain.

I managed about 4 clusters for some time and found it pretty simple. As for cost, it’s more about getting away from VMware.

Are you Azure focused?

If you are an Azure shop, go with Azure Stack HCI. I haven’t used it personally but I see a lot of Reddit comments about it.

I have compared Proxmox and XCP-ng/Xen Orchestra in recent weeks, and for me Proxmox is not mature enough for production work in several respects. XCP-ng, on the other hand, I do see as a solid option, especially if you pay for the Xen Orchestra subscription, which in addition to unlocking integrated management of your entire XCP-ng fleet also lets you make backups.

Proxmox sort of mangles the kernel and I find it frustrating to use from the command line. (I have also blown off my foot once or twice.) I would use Incus instead. Incus doesn’t require its own distro, so you could install it on an immutable distro.

If I were purely running VMs that didn’t need access to USB hardware I might go with XCP-ng.

I personally don’t see much reason to use LXC. Sure, it is theoretically faster, but it creates lots of headaches. I do use LXC, but only for simple things like jump boxes.

Look at OpenShift if you’re looking for immutable, production-ready Linux infrastructure. Containers are quickly replacing VMs.

Containers run on top of VMs.

I have run plenty of clusters on bare metal, both OpenShift and vanilla. No VMs are needed.

That’s a pain though. When you add new hardware there is more setup. With virtualization you can just transfer VMs to the hardware. Also, you can set up templates and automation for VM creation.

Eh, they’ve thought of that.

OpenShift uses immutable OS images and can install over PXE. You can even automate deployment using IPMI/iDRAC, though that was really buggy when I tried it in 2022.

Hyper-V is probably the best for Windows shops but my concern is that it will just become Azure-tied at some point. I could be wrong but somehow I don’t trust Microsoft to not screw everyone over.

Funny you should say this, because they are actually working on a Hyper-V stack with Intel’s Cloud Hypervisor (VMM) atop the Microsoft Hypervisor microkernel (MSHV) with support for Linux as the root partition (i.e. “Dom0”). No Windows/Azure required.

https://scholz.ruhr/blog/hyper-v-on-linux-yes-this-way-around/

https://www.phoronix.com/news/Microsoft-Hyper-V-Dom0-Linux

At this point the biggest hurdle is the microkernel being publicly released as a standalone component.

What are your thoughts on Unraid as an alternative?

Not for enterprise, but if you just need some general VMs and Docker services with good redundancy, it’s a really good product.

Running on Debian is pretty close to immutable, as long as you don’t do anything silly with the underlying OS besides run vanilla Proxmox. Reinstalling Proxmox and restoring /etc/pve is as hard as it gets, and if you get really fancy you can install the PBS client right on the nodes and back them up fully to the same place you back up the guests.

I’ve used Proxmox for years now (used to mod the reddit /r/proxmox sub along with Jim Salter) and I would be comfortable running it in enterprise in anger. As a former VCP, I appreciate the transparency of Proxmox, and like you say, it’s really just an amalgamation of standard services with a nice front end and a bunch of automation in the background that you can tear into if you need to.

And Proxmox Backup Server is everything I ever wanted in a backup system for it. I’d put it head to head with Veeam and other VMware solutions.

Proxmox is not immutable. Immutable Linux uses a read-only filesystem. They normally have automatic rollbacks, and updates usually take the form of deltas. (Not always)

The benefit is that the system is highly predictable and updates only apply on a reboot. If there is a bad update it just fails the self test and then rolls back. This is way more dynamic than stock Debian.
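As a rough illustration of that update-then-self-test-then-rollback flow, here is a toy two-slot (A/B) model. It is only a sketch of the general idea, not how TrueNAS, OSTree or any particular distro implements it; the slot names, image strings and `health_check` callback are all hypothetical.

```python
def apply_update(slots, active, new_image, health_check):
    """Stage new_image in the inactive slot, then pick the slot to boot next.

    slots:  dict mapping slot name ("a"/"b") to the image installed there
    active: name of the slot the system is currently running from
    Returns (updated slots, slot to run after the simulated reboot).
    """
    standby = "b" if active == "a" else "a"
    slots = dict(slots)
    slots[standby] = new_image  # the running image is never modified in place
    if health_check(new_image):
        return slots, standby   # self-test passed: the update sticks
    return slots, active        # self-test failed: keep booting the old slot


def looks_healthy(image):
    # Stand-in for a real post-boot self test.
    return "bad" not in image


slots, active = {"a": "v1", "b": None}, "a"
slots, active = apply_update(slots, active, "v2", looks_healthy)
print(active, slots[active])
slots, active = apply_update(slots, active, "v3-bad", looks_healthy)
print(active, slots[active])
```

The point of the design is that a failed update never touches the image you are running, so recovering is just a matter of booting the slot that already worked.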

I think you might be confusing stability with immutability.

Of course I realize that. But Debian is perfectly fine as a base for Proxmox because of its stability, and if you aren’t doing silly things like installing Docker on the host, it’s not going to be an issue.

It can be though if you start messing around. For instance I had an issue with a newer kernel so I downgraded only to find that it wouldn’t boot. (ZFS can be upgraded but not downgraded)

I guess the big takeaway is don’t shoot yourself in the foot